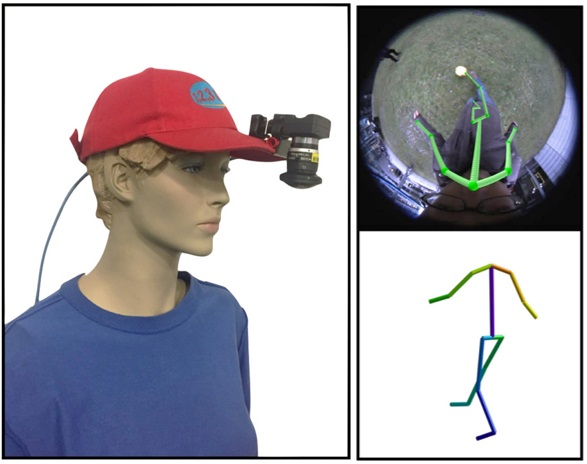

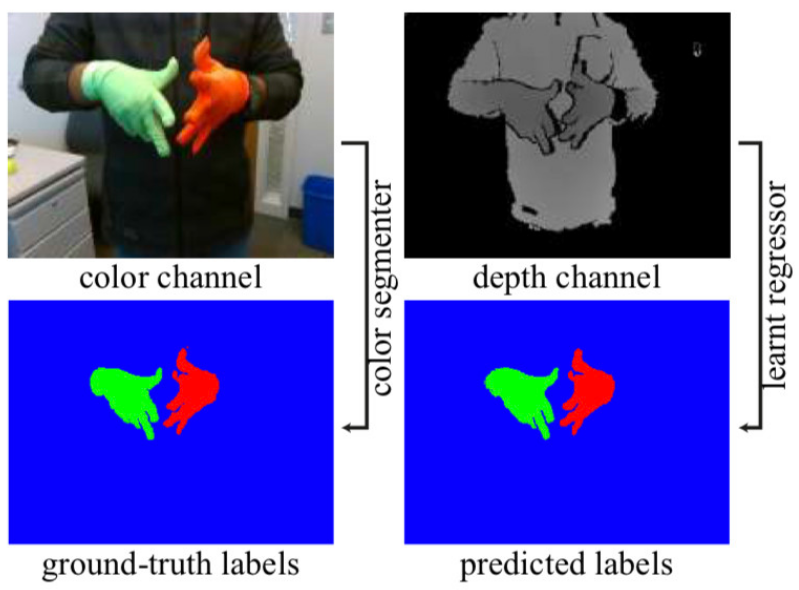

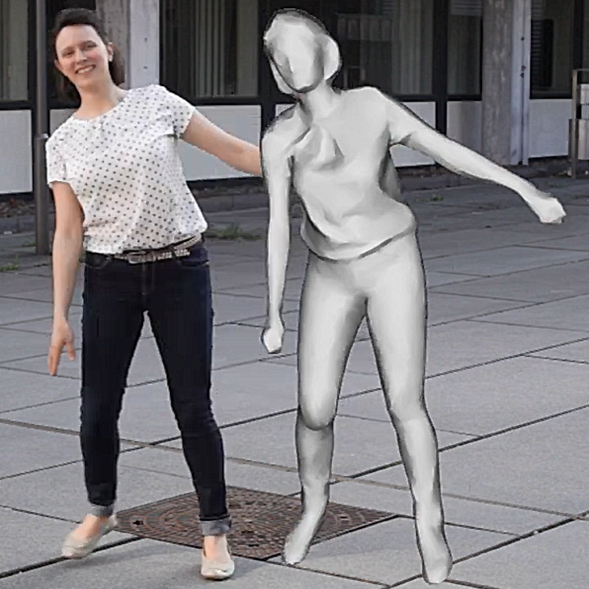

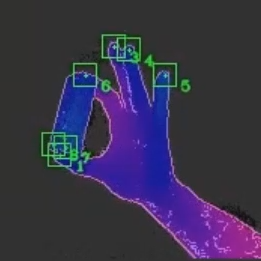

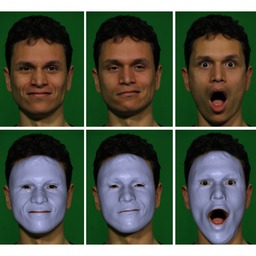

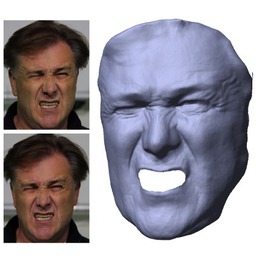

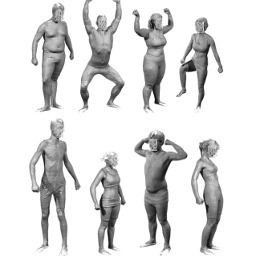

GVVPerfCapEva, is a hub to access a wide range of human shape and performance capture datasets from the Graphics, Vision, and Video and partner research groups at MPI for Informatics and elsewhere. These datasets provide an opportunity to enable further research in different fields such as full body performance capture, facial performance capture, or hand and finger performance capture.

The datasets span different sensor modalities such as depth cameras, multi-view video, optical markers, and inertial sensors. For some datasets, external results can be uploaded and compared to previous approaches.

License: Please see the individual dataset pages for details on license/restrictions. In general, you are required to cite the respective publication(s) if you use that dataset.

Supported by ERC Starting Grant CapReal |

|

Affiliated to |

|